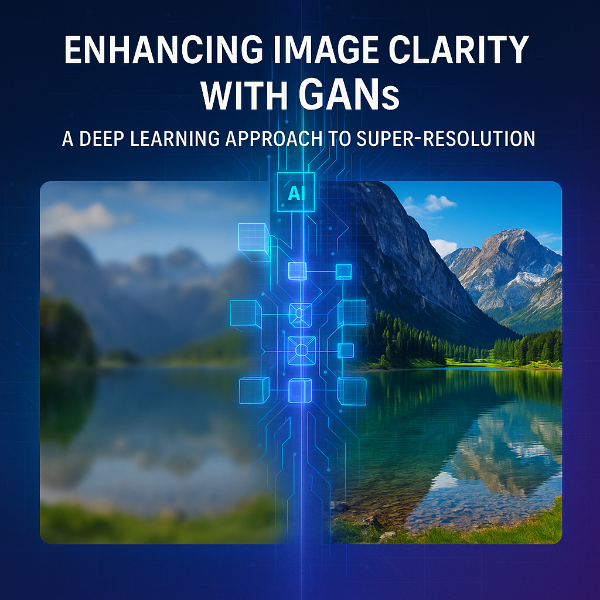

Enhancing Image Clarity with GANs: A Deep Learning Approach to Super-Resolution

Abstract

This project implements an image super-resolution model based on Generative Adversarial Networks (GANs). The model turns low-resolution images into high-resolution, photo-realistic outputs by learning the mapping between paired low- and high-resolution training images. A deep residual Generator network is trained against a deep convolutional Discriminator network using three loss functions: adversarial, content, and perceptual. The trained model is served through a Flask-based web application and supports GPU acceleration and batch processing.

Introduction

Image super-resolution is increasingly relevant in photography, medical imaging, satellite imaging, and surveillance. Turning a low-resolution image into a high-resolution version that keeps photo-realism and fine detail is difficult, because many different high-resolution images are consistent with the same low-resolution input. Interpolation methods, and CNN-based models trained only with pixel-wise losses, tend to produce blurry or artifact-heavy outputs. GANs offer an alternative by training two networks in opposition: a Generator that produces super-resolved images and a Discriminator that tries to distinguish them from real high-resolution images. This adversarial training pushes the Generator toward noticeably sharper, more realistic output.
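For reference, the adversarial game described above is usually written as the standard GAN minimax objective. Here $G$ maps a low-resolution image $I^{LR}$ to a super-resolved estimate and $D$ outputs the probability that its input is a real high-resolution image $I^{HR}$. This is the textbook formulation, shown for orientation; the project's exact objective is not given in this document.

$$
\min_{G}\max_{D}\;
\mathbb{E}_{I^{HR}}\big[\log D(I^{HR})\big]
+ \mathbb{E}_{I^{LR}}\big[\log\big(1 - D(G(I^{LR}))\big)\big]
$$

In practice the Generator is usually trained to minimize the non-saturating form $-\log D(G(I^{LR}))$ instead of $\log\big(1 - D(G(I^{LR}))\big)$, which gives stronger gradients early in training, when the Discriminator easily rejects generated images.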

This project builds on state-of-the-art GAN architectures and adds content and perceptual loss functions so that the generated images are both structurally accurate and visually convincing. The Generator uses residual blocks and skip connections to learn deep features and pixel shuffle (sub-pixel convolution) layers for efficient upscaling, while a VGG-based perceptual loss compares generated and real images in feature space during training. A Flask-based web UI lets users upload an image and receive the enhanced result.
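The combination of content, perceptual, and adversarial losses described above is commonly implemented as a weighted sum. The following is a minimal PyTorch sketch, not the project's code. The function name `generator_loss` is hypothetical, and the weights (6e-3 for the perceptual term, 1e-3 for the adversarial term) are assumptions to be tuned. `feature_extractor` stands in for a frozen VGG19 slice, for example the first 36 layers of `torchvision.models.vgg19(...).features` (which end after the relu5_4 activation), with `requires_grad` disabled on its parameters.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


def generator_loss(
    sr: torch.Tensor,
    hr: torch.Tensor,
    d_logits_on_sr: torch.Tensor,
    feature_extractor: nn.Module,
    w_perceptual: float = 6e-3,   # assumed weight, not taken from the project
    w_adversarial: float = 1e-3,  # assumed weight, not taken from the project
) -> torch.Tensor:
    """Weighted sum of content, perceptual, and adversarial losses (sketch)."""
    # Content loss: pixel-wise MSE between the super-resolved and ground-truth images.
    content = F.mse_loss(sr, hr)
    # Perceptual loss: MSE between feature maps of a fixed network (e.g. a VGG19 slice).
    perceptual = F.mse_loss(feature_extractor(sr), feature_extractor(hr))
    # Adversarial loss: BCE that rewards the Generator when the Discriminator
    # labels super-resolved images as real (target 1); expects raw logits.
    adversarial = F.binary_cross_entropy_with_logits(
        d_logits_on_sr, torch.ones_like(d_logits_on_sr)
    )
    return content + w_perceptual * perceptual + w_adversarial * adversarial
```

If the real VGG19 is used, inputs are normally normalized with the ImageNet mean and standard deviation before being passed to `feature_extractor`, since that is the input distribution the pretrained weights expect.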

Problem Statement

Low-resolution images often lose critical detail, which makes them unsuitable for applications that require clarity and precision. Current solutions either enlarge images without recovering detail (interpolation) or generalize poorly to diverse datasets. There is a clear need for a deep learning model that can upscale images while preserving their realism and essential features.

Existing System and Disadvantages

Existing Methods:

- Bicubic interpolation

- Shallow CNN-based SR models

- Traditional Super-Resolution CNN (SRCNN)

Disadvantages:

- Blurry and unrealistic output

- Inability to capture perceptual features

- Poor generalization to unseen data

- Limited image quality metrics

- No real-time or user-friendly interface
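Bicubic interpolation, the first existing method listed above, is also the usual way super-resolution training pairs are built: a high-resolution image is downscaled with bicubic resampling to create the low-resolution input, and bicubic upscaling of that input gives the baseline a learned model should beat. The sketch below assumes Pillow and a 4x scale factor (the document does not state the scale); `make_lr_hr_pair` and `bicubic_baseline` are hypothetical helper names.

```python
import numpy as np
from PIL import Image


def make_lr_hr_pair(hr: Image.Image, scale: int = 4):
    """Create a (low-res, high-res) pair by bicubic downsampling (assumed 4x).

    The HR image is cropped so both sides are divisible by `scale`,
    which keeps LR and HR pixel grids exactly aligned.
    """
    w, h = hr.size
    w, h = w - w % scale, h - h % scale
    hr = hr.convert("RGB").crop((0, 0, w, h))
    lr = hr.resize((w // scale, h // scale), Image.BICUBIC)
    return lr, hr


def bicubic_baseline(lr: Image.Image, scale: int = 4) -> Image.Image:
    """Upscale with plain bicubic interpolation (the 'existing system' baseline)."""
    w, h = lr.size
    return lr.resize((w * scale, h * scale), Image.BICUBIC)
```

Comparing `bicubic_baseline(lr)` and the model output against the same `hr` image with the same metric makes the improvement over interpolation measurable.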

Proposed System and Advantages

Proposed Solution:

- Use of GAN-based architecture with VGG-based perceptual loss

- Deep residual Generator network and deep convolutional Discriminator network

- Flask-based web interface for user interaction

- Use of PSNR, SSIM, and estimated Mean Opinion Score (MOS) for evaluation

- Efficient training with model checkpointing and CUDA acceleration
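Model checkpointing and CUDA acceleration fit into a standard alternating GAN update: first the Discriminator learns to separate real from generated images, then the Generator is updated to fool it. The sketch below is illustrative, not the project's training code. It assumes the Generator returns an image and the Discriminator a raw logit, omits the perceptual term for brevity, and the names `train_step` and `save_checkpoint` and the adversarial weight of 1e-3 are assumptions.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


def train_step(generator: nn.Module, discriminator: nn.Module,
               opt_g: torch.optim.Optimizer, opt_d: torch.optim.Optimizer,
               lr_batch: torch.Tensor, hr_batch: torch.Tensor,
               device: torch.device, w_adversarial: float = 1e-3):
    """One alternating update (sketch). Models are assumed to already be on `device`."""
    lr_batch, hr_batch = lr_batch.to(device), hr_batch.to(device)

    # Discriminator step: real HR images -> 1, generated SR images -> 0.
    with torch.no_grad():
        sr = generator(lr_batch)  # no Generator gradients needed here
    real_logits = discriminator(hr_batch)
    fake_logits = discriminator(sr)
    d_loss = (F.binary_cross_entropy_with_logits(real_logits, torch.ones_like(real_logits))
              + F.binary_cross_entropy_with_logits(fake_logits, torch.zeros_like(fake_logits)))
    opt_d.zero_grad()
    d_loss.backward()
    opt_d.step()

    # Generator step: content (MSE) plus a weighted adversarial term.
    sr = generator(lr_batch)
    fake_logits = discriminator(sr)
    g_loss = F.mse_loss(sr, hr_batch) + w_adversarial * F.binary_cross_entropy_with_logits(
        fake_logits, torch.ones_like(fake_logits))
    opt_g.zero_grad()
    g_loss.backward()  # also fills D's .grad, which opt_d.zero_grad() clears next step
    opt_g.step()
    return d_loss.item(), g_loss.item()


def save_checkpoint(path, epoch, generator, discriminator, opt_g, opt_d):
    """Save everything needed to resume training later with load_state_dict."""
    torch.save({
        "epoch": epoch,
        "generator": generator.state_dict(),
        "discriminator": discriminator.state_dict(),
        "opt_g": opt_g.state_dict(),
        "opt_d": opt_d.state_dict(),
    }, path)
```

A typical loop picks `device = torch.device("cuda" if torch.cuda.is_available() else "cpu")`, moves both models with `.to(device)`, calls `train_step` for every batch, and calls `save_checkpoint` at the end of each epoch so an interrupted run can resume.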

Advantages:

- Generates high-resolution, perceptually realistic images

- Handles a wide variety of image types and sizes

- Improves perceptual quality through VGG perceptual loss

- Robust architecture with enhanced stability

- Easy-to-use web application interface

Modules

- Image Upload Interface: Flask-based frontend for user image input

- Preprocessing Module: resizing, normalization, and format conversion

- Generator Network: residual learning blocks with PReLU and pixel shuffle

- Discriminator Network: deep CNN with LeakyReLU for real vs. fake classification

- Training Module: adversarial training with multiple loss functions

- Evaluation Metrics: PSNR, SSIM, and VGG perceptual comparison

- Result Rendering: display of the enhanced high-resolution output to the user
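PSNR, one of the evaluation metrics listed above, compares an output with its ground-truth high-resolution image through the mean squared error: PSNR = 10 log10(MAX² / MSE). The following is a minimal NumPy sketch, assuming 8-bit images (MAX = 255) of identical shape. Many super-resolution papers compute PSNR on the luminance channel only and crop a border first, which this sketch does not do. SSIM is more involved (it uses local windowed statistics); a library routine such as scikit-image's `skimage.metrics.structural_similarity` could be used for it, although scikit-image is not among this project's listed requirements.

```python
import numpy as np


def psnr(reference: np.ndarray, estimate: np.ndarray, max_value: float = 255.0) -> float:
    """Peak signal-to-noise ratio in dB: 10 * log10(max_value**2 / MSE)."""
    if reference.shape != estimate.shape:
        raise ValueError("images must have the same shape")
    # Work in float64 so uint8 subtraction cannot wrap around.
    diff = reference.astype(np.float64) - estimate.astype(np.float64)
    mse = np.mean(diff ** 2)
    if mse == 0:
        return float("inf")  # identical images
    return float(10.0 * np.log10(max_value ** 2 / mse))
```

For example, two 8-bit images that differ by exactly 1 at every pixel have MSE = 1, so their PSNR is 20 log10(255), about 48.13 dB. Higher is better, but PSNR favors smooth outputs, which is why perceptual measures are reported alongside it.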

Algorithms:

- Generator: Deep residual network with skip connections and pixel shuffling

- Discriminator: Deep convolutional network with increasing feature maps

- Loss Functions:
  - Adversarial Loss (Binary Cross Entropy)
  - Content Loss (Mean Squared Error)
  - Perceptual Loss (VGG19 Feature Loss)
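The Generator and Discriminator described above can be sketched in PyTorch roughly as follows. This is an SRGAN-style layout written for illustration, not the project's actual code. The block count (16), channel width (64), 9x9 head and tail kernels, 4x default scale, LeakyReLU slope of 0.2, and the global average pooling before the Discriminator's dense layers (which makes it accept any input size) are all assumptions.

```python
import torch
import torch.nn as nn


class ResidualBlock(nn.Module):
    """Conv-BN-PReLU-Conv-BN with an identity skip connection."""
    def __init__(self, channels: int = 64):
        super().__init__()
        self.body = nn.Sequential(
            nn.Conv2d(channels, channels, 3, padding=1), nn.BatchNorm2d(channels), nn.PReLU(),
            nn.Conv2d(channels, channels, 3, padding=1), nn.BatchNorm2d(channels),
        )

    def forward(self, x):
        return x + self.body(x)


class UpsampleBlock(nn.Module):
    """Conv to 4x channels, then PixelShuffle(2) doubles height and width."""
    def __init__(self, channels: int = 64):
        super().__init__()
        self.conv = nn.Conv2d(channels, channels * 4, 3, padding=1)
        self.shuffle = nn.PixelShuffle(2)
        self.act = nn.PReLU()

    def forward(self, x):
        return self.act(self.shuffle(self.conv(x)))


class Generator(nn.Module):
    def __init__(self, num_blocks: int = 16, channels: int = 64, scale: int = 4):
        super().__init__()
        if scale < 2 or scale & (scale - 1):
            raise ValueError("scale must be a power of two (2, 4, 8, ...)")
        self.head = nn.Sequential(nn.Conv2d(3, channels, 9, padding=4), nn.PReLU())
        self.blocks = nn.Sequential(*[ResidualBlock(channels) for _ in range(num_blocks)])
        self.mid = nn.Sequential(nn.Conv2d(channels, channels, 3, padding=1), nn.BatchNorm2d(channels))
        # One 2x pixel-shuffle stage per factor of two in `scale`.
        self.upsample = nn.Sequential(*[UpsampleBlock(channels) for _ in range(scale.bit_length() - 1)])
        self.tail = nn.Conv2d(channels, 3, 9, padding=4)

    def forward(self, x):
        head = self.head(x)
        # Long skip connection around the whole residual trunk.
        features = head + self.mid(self.blocks(head))
        return self.tail(self.upsample(features))


class Discriminator(nn.Module):
    """Conv stack with LeakyReLU; feature maps grow 64 -> 512, stride 2 halves resolution."""
    def __init__(self):
        super().__init__()
        layers, in_ch = [], 3
        for i, out_ch in enumerate([64, 64, 128, 128, 256, 256, 512, 512]):
            layers.append(nn.Conv2d(in_ch, out_ch, 3, stride=2 if i % 2 else 1, padding=1))
            if i > 0:
                layers.append(nn.BatchNorm2d(out_ch))
            layers.append(nn.LeakyReLU(0.2, inplace=True))
            in_ch = out_ch
        self.features = nn.Sequential(*layers)
        self.classifier = nn.Sequential(
            nn.AdaptiveAvgPool2d(1), nn.Flatten(),
            nn.Linear(512, 1024), nn.LeakyReLU(0.2, inplace=True),
            nn.Linear(1024, 1),  # raw logit: real vs. fake
        )

    def forward(self, x):
        return self.classifier(self.features(x))
```

The Discriminator returns a raw logit, so it pairs with `binary_cross_entropy_with_logits` (or `nn.BCEWithLogitsLoss`) rather than a sigmoid followed by `BCELoss`. This is still the binary cross-entropy listed above, but numerically more stable.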

Software Requirements

| Software/Package | Version/Type |
|---|---|
| Python | 3.9 |
| Flask | Web Framework |
| PyTorch | ≥1.8.0 |
| Torchvision | ≥0.9.0 |
| Pillow | ≥8.0.0 |
| NumPy | ≥1.19.2 |
| OpenCV | Image Processing |
| Jupyter Notebook | ≥1.0.0 (for training) |

Hardware Requirements

| Component | Specification |
|---|---|
| RAM | 16 GB or more |
| GPU | CUDA-compatible GPU |
| Processor | Intel i5/i7 or equivalent |
| Storage | Minimum 10 GB |


Conclusion and Future Enhancement

Conclusion:

This project successfully demonstrates a high-quality image super-resolution system using GANs. The model leverages adversarial training and perceptual loss to produce enhanced images that are visually realistic and structurally accurate. Its integration into a web application makes it accessible to non-technical users as well.

Future Enhancements:

- Extend to video frame enhancement

- Implement multi-scale GANs for extreme super-resolution

- Integrate with mobile apps for real-time enhancement

- Explore lightweight models for edge devices

- Train on larger and more diverse datasets for broader deployment
